Any equipment, including server equipment, sometimes begins to work unpredictably. It doesn’t matter at all whether this equipment is new or has been operating at full capacity for several years.

There are many cases of failure and incorrect operation, and diagnosing the problem often turns into a fascinating puzzle.

Below we will talk about some interesting and non-trivial cases.

Troubleshooting

Problems are most often registered when clients contact technical support through the ticket system. If the client rents a fixed-configuration dedicated server from us, we run diagnostics to establish whether the problem is software-related.

Clients usually solve software problems on their own; even so, we always try to offer help from our system administrators.

If it becomes clear that the problem is in the hardware (for example, the server does not see part of the RAM), we always have a similar server platform in reserve.

In that case we move the disks from the failed server to the backup one and, after a little reconfiguration of the network equipment, start the server. Data is not lost, and downtime does not exceed 20 minutes from the moment the client contacts us.

Examples of problems and solutions

Network failure on the server

After transferring disks from a failed server to a backup one, the network on the server may stop working. This usually happens with Linux operating systems such as Debian or Ubuntu. During the initial installation of the operating system, the MAC addresses of the network cards are written to a special file: /etc/udev/rules.d/70-persistent-net.rules.

When the operating system starts, this file maps interface names to MAC addresses. After the server is replaced with a backup one, the MAC addresses of the network interfaces no longer match, and the network on the server does not come up.

To solve the problem, remove the specified file and restart the network service, or restart the server.

The operating system, not finding this file, will automatically generate a new one and match the interfaces with the new MAC addresses of the network cards.

There is no need to reconfigure IP addresses after this; the network will start working immediately.
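The mismatch described above can be checked before deleting anything. The following is a minimal sketch, assuming the rule format used by Debian and Ubuntu releases of that era (an `ATTR{address}=="..."` and `NAME="..."` pair on each line); the sample rules, MAC addresses and function names are illustrative and not taken from a real server.

```python
import re

# Matches the MAC address and the interface name inside one udev rule line.
RULE_RE = re.compile(r'ATTR\{address\}=="([0-9a-fA-F:]{17})".*NAME="([^"]+)"')


def parse_persistent_net_rules(text):
    """Return {interface_name: mac} for every rule line in the file text."""
    mapping = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        match = RULE_RE.search(line)
        if match:
            mac, name = match.groups()
            mapping[name] = mac.lower()
    return mapping


def stale_interfaces(rules_text, present_macs):
    """List interface names whose recorded MAC is absent from this machine."""
    present = {mac.lower() for mac in present_macs}
    recorded = parse_persistent_net_rules(rules_text)
    return sorted(name for name, mac in recorded.items() if mac not in present)


# Hypothetical contents of 70-persistent-net.rules from the failed server.
SAMPLE_RULES = '''
# PCI device (example entry)
SUBSYSTEM=="net", ACTION=="add", ATTR{address}=="00:25:90:aa:bb:01", NAME="eth0"
SUBSYSTEM=="net", ACTION=="add", ATTR{address}=="00:25:90:aa:bb:02", NAME="eth1"
'''

# Hypothetical MAC addresses of the network cards in the backup server.
NEW_MACS = ["00:25:90:CC:DD:01", "00:25:90:CC:DD:02"]

print(stale_interfaces(SAMPLE_RULES, NEW_MACS))
```

If the list is not empty, the recorded names point at network cards that no longer exist, which is exactly the situation in which the file has to be removed.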

Floating freezing problem

One day, a server came to us for diagnostics with random freezes during operation. We checked the BIOS and IPMI logs: empty, no errors. We put it under a stress test, loading all processor cores to 100% while monitoring the temperature, and it froze dead after 30 minutes. The processor itself worked normally, temperatures did not exceed standard values under load, and all the coolers were in good working order. It became clear that overheating was not the issue.

Next, we had to rule out faulty RAM modules, so we ran a memory test using the popular Memtest86+. After about 20 minutes the server froze, as expected, reporting errors on one of the RAM modules.

We replaced the module with a new one and tested the server again, but with no luck: the server froze again, this time reporting errors on a different RAM module. We replaced that one too. Another test, another freeze, again with RAM errors. A careful inspection of the RAM slots did not reveal any defects.

Only one possible culprit was left: the CPU. The memory controller is located inside the processor, and it was this controller that could be failing.

When we removed the processor, we found the real cause: one pin of the socket was broken near the top, and the broken tip was literally stuck to the contact pad of the processor. With no load on the server everything worked properly, but as the processor temperature rose, the contact was lost, the memory controller stopped working normally, and the server froze.

The problem was finally solved by replacing the motherboard, since we cannot repair a broken socket pin ourselves; that is a job for a service center.

Imaginary server freeze during OS installation

Quite funny cases arise when equipment manufacturers change the hardware architecture, dropping support for old technologies in favor of new ones. A user contacted us with a complaint that the server froze when he tried to install the Windows Server 2008 R2 operating system. After the installer started successfully, the server stopped responding to the mouse and keyboard in the KVM console. To localize the problem, we connected a physical mouse and keyboard to the server, with the same result: the installer started and then stopped responding to input devices.

At that time, this server was one of the first we had based on the Supermicro X11SSL-F motherboard. The BIOS settings contained one interesting item, Windows 7 install, set to Disable. Since Windows 7, 2008 and 2008 R2 are deployed by the same installer, we set this parameter to Enable, and the mouse and keyboard finally started working. But this was only the beginning of the saga of installing the operating system.

At the disk selection step, no disks were displayed; instead, an error appeared demanding the installation of additional drivers. The operating system was being installed from a USB flash drive, and a quick search on the Internet showed that this happens when the installer cannot find drivers for the USB 3.0 controller.

Wikipedia reported that the problem is solved by disabling USB 3.0 support (the XHCI controller) in the BIOS. When we opened the documentation for the motherboard, a surprise awaited us: the developers had dropped the EHCI (Enhanced Host Controller Interface) controller entirely in favor of XHCI (eXtensible Host Controller Interface). In other words, all USB ports on this motherboard are USB 3.0 ports. If we disabled the XHCI controller, we would also disable the input devices, making it impossible to work with the server and, accordingly, to install the operating system.

Since the server platforms were not equipped with drives for reading CD/DVD discs, the only solution to the problem was to integrate the drivers directly into the operating system distribution. Only by integrating the USB 3.0 controller drivers and rebuilding the installation image were we able to install Windows Server 2008 R2 on this server, and this case was included in our knowledge base so that engineers would not waste unnecessary time on fruitless attempts.

Even funnier are the cases when clients bring us equipment for placement, and it does not behave as expected. This is exactly what happened with the Dell PowerVault line of disk shelves.

The device is a data storage system with two disk controllers and network interfaces for working via the iSCSI protocol. In addition to these interfaces, there is an MGMT port for remote control.

Among our services for hosted equipment there is a special service, “Additional 10 Mbit/s port”, which can be ordered when remote server management tools need to be connected. These tools go by different names:

- "iLO" from Hewlett-Packard;

- "iDrac" from Dell;

- IPMI from Supermicro.

To limit the speed, the port is simply configured as 10BASE-T and enabled with maximum speed at 10 Mbit/s. After everything was ready, we connected the MGMT port of the disk shelf, but the client almost immediately reported that nothing was working for him.

After checking the status of the switch port, we discovered the unpleasant message “Physical link is down”. This message indicates that there is a problem with the physical connection between the switch and the client equipment connected to it.

A poorly crimped connector, a broken connector, broken wires in the cable - this is a small list of problems that lead specifically to the lack of a link. Of course, our engineers immediately took a twisted pair tester and checked the connection. All wires connected perfectly, both ends of the cable were crimped perfectly. In addition, by connecting a test laptop to this cable, we received a connection with a speed of 10 Mbit/s as expected. It became clear that the problem was on the client's equipment side.

Since we always try to help our clients solve problems, we decided to figure out what exactly causes the lack of a link. We carefully examined the MGMT port connector - everything is in order.

We found the original manual on the manufacturer's website to check whether the port could be shut down in software. No such option was provided: the port comes up automatically in any case. Although such equipment should always support Auto-MDI(X), that is, correctly detect whether a regular or a crossover cable is connected, for the sake of experiment we crimped a crossover cable and plugged it into the same switch port. We also tried forcing the duplex parameter on the switch port. The effect was zero: there was no link, and we were running out of ideas.

Then one of the engineers made a completely counterintuitive suggestion: the equipment does not support 10BASE-T and will only work at 100BASE-TX or even 1000BASE-T. Typically any port, even on the cheapest device, is compatible with 10BASE-T, so at first the suggestion was dismissed as fiction, but out of desperation we decided to try switching the port to 100BASE-TX.

Our surprise knew no bounds: the link came up instantly. Why the MGMT port lacks 10BASE-T support remains a mystery. Such cases are very rare, but they do happen.

The client was no less surprised than we were and was very grateful for the solution. We left the port at 100BASE-TX and limited the speed using the switch's built-in rate-limiting mechanism.

Cooling turbine failure

One day a client came to us and asked us to remove his server from the rack and move it to the service area. The engineers did so and left him alone with the equipment. An hour passed, then two, then three; the client kept starting and stopping the server, so we asked what the problem was. It turned out that two of the six cooling turbines in the Hewlett-Packard server had failed. The server would turn on, display a cooling error and immediately turn off. Meanwhile, the server hosted a hypervisor with critical services. To restore the services, the virtual machines had to be migrated urgently to another physical node.

We decided to help the client in the following way. Usually the server decides that a cooling fan is fine simply by reading its rotation speed. At the same time, of course, Hewlett-Packard engineers did everything to make it impossible to replace the original turbine with an analogue: non-standard connector, non-standard pinout.

An original part costs about $100, and you can't just go and buy it; it has to be ordered from abroad. Fortunately, we found a diagram with the original pinout on the Internet and learned that one of the pins is responsible for reading the rotation speed.

The rest was a matter of technique: we took a couple of prototyping wires (they happened to be at hand, since some of our engineers are keen on Arduino) and simply connected the pins of the neighboring working turbines to the connectors of the failed ones. The server started, and the client finally managed to migrate the virtual machines and start the services.

Of course, all this was done solely under the client's responsibility. Nevertheless, this non-standard move reduced downtime to a minimum.

Where are the disks?

Sometimes the cause of a problem is so non-trivial that finding it takes a very long time. This is what happened when one of our clients complained about random disk failures and server freezes. The hardware platform was a Supermicro in the 847 chassis (4U form factor) with cages for 36 drives. The server had three identical Adaptec RAID controllers, each with 12 disks connected. When the problem occurred, the server stopped seeing a random number of disks and froze. The server was taken out of production and diagnostics began.

The first thing we found out was that the disks were dropping off on only one controller. The dropped drives disappeared from the list in the native Adaptec management utility and reappeared only after the server was completely powered off and powered on again. The first suspect was the controller firmware. All three controllers had slightly different firmware, so we decided to install the same version on all of them. We did so and ran the server under maximum load: everything worked as expected. We marked the problem as resolved and returned the server to the client for production.

Two weeks later the client came back with the same problem. We decided to replace the controller with an identical one. We replaced it, flashed it, connected it and started testing. The problem remained: after a couple of days all the disks on the new controller dropped off and the server froze.

We moved the controller to another slot and replaced the backplane and the SATA cables from the controller to the backplane. After a week of testing the disks dropped off again and the server froze again. Contacting Adaptec support brought no results: they checked all three controllers and found no problems. We replaced the motherboard, rebuilding the platform almost from scratch. Everything that raised the slightest doubt was replaced with new parts. And the problem reappeared. Pure mysticism.

The problem was solved by accident, when we started checking each disk individually. Under a certain load, one of the disks began to knock its heads and short-circuited the SATA port, without any alarm indication. The controller then stopped seeing some of the disks and recognized them again only after the power was reconnected. This is how a single failed disk brought down the entire server platform.

Conclusion

Of course, this is only a small part of the interesting situations that our engineers have resolved. Some problems are quite difficult to “catch”, especially when the logs contain no hint of the failure. But such situations push engineers to understand the design of server equipment in detail and to find a wide variety of solutions. These are the funny cases that have happened in our practice.

Which ones have you encountered? Welcome to the comments.

As a rule, the average user associates concepts such as “web server” or “hosting” with something completely incomprehensible. Meanwhile, there is nothing complicated about them. We will try to explain what a web server is, why it is needed and how it works, without going into technical details, in plain terms. We will also look separately at how to create and configure such a server on a home computer or laptop.

What is a web server?

The most important thing here is to understand that a server of this type is nothing more than a computer on the Internet with the appropriate software installed.

But this absolutely does not mean that you cannot create your own configuration at home. Since Windows operating systems are more common in our country, questions about how to create a web server on Ubuntu (Linux) will not be considered.

What are web servers for?

Servers of this type store a great deal of information on the Internet. Antivirus programs, for example, contact them to update their databases. The user also deals with such servers directly by making requests in the browser (searching for information, opening a page, etc.).

So all the pages on the Internet are stored on web servers: on one side, a user or an installed program makes a request, and on the other, the server being accessed returns the result.

How does it all work?

All users are accustomed to the fact that, to open a resource on the Internet (a web page) containing certain information, they simply type the prefix www (or http) and the name that follows into the address bar. But hardly anyone thinks about how the web server understands the request and produces the result.

Here we need to distinguish between the concepts of server and client. In our case, the page posted on the Internet is stored on a remote server. The user's computer acts as the client from which the request is made.

Programs called web browsers are used to access the Internet. They translate the user's request into a digital code that the web server can recognize. The server processes it and produces a response in the corresponding code, and the browser converts the millions of zeros and ones into a normal form with the text, graphics, sound or video placed on the page.

The most popular web servers

Of all the server software, Apache and Microsoft IIS are considered the most common. The first is more popular and is mostly used on UNIX-like systems, although it can also be installed on Windows. In addition, Apache is completely free software and is compatible with almost all known operating systems. However, this software is intended mainly for professional programmers and developers.

The Microsoft product is designed for the average user, who can install and configure such a web server for Windows without the help of a qualified specialist.

However, according to official statistics, about 60% of all existing servers run Apache, so we will look at installation and initial configuration using it as an example.

Web server on a home computer: installation

To install, you will need to download a special server package, abbreviated as WAMP, which includes three main components:

- Apache is the server software itself, which can work on its own, but only if the hosted pages contain no dynamic content.

- PHP is a programming language used by content management systems with dynamic content, such as WordPress, Joomla and Drupal.

- MySQL is a database management system, used, again, when creating sites with dynamic content.

Installation can be done from the WampServer package. To do this, just follow the instructions of the “Wizard”, who at one stage will offer to select the Internet browser that will be used by default.

To do this, you will need to go to the folder with the browser executable file (if it is not Internet Explorer, it is usually located in the Program Files directory). The browser itself should also be added to the Windows Firewall exceptions list. At the final stage, check the box next to the immediate launch item, after which an icon will appear in the system tray; click it and select the Localhost item.

If everything is done correctly, the server home page will appear. Next, you will be prompted to install additional components (if this is not done, the system will generate an error). Basically, the installation concerns additional add-ons, elements and components that will be used by the server in the future.

Example of setting up and testing a server

Setting up a web server is a little more complicated. First, in the system tray menu, select the WWW folder (the place where add-ons or HTML files are stored). After that, write the following text in Notepad:

<?php echo "Hello!"; ?>

You can simply copy the text into Notepad and save the file under the name index.php in the same WWW folder (this step can be skipped, since it is used solely for checking the local host). Instead of the greeting, you can insert any other text or phrase.

Then you need to refresh the page in the browser (F5), after which the content will be displayed on the screen. But the page will not be accessible to other computers.

To open access, you need to change the httpd.conf file, writing in the section that begins with

Order Allow,Deny

Instead of an afterword

Of course, only the most basic and brief information about how a home web server works and is configured is given here, for a general understanding. In reality, all the processes are much more complex, especially when it comes to converting requests and issuing responses, not to mention setting up a server at home. A user who wants to understand these issues cannot do without at least a basic knowledge of WordPress and the PHP language. On the other hand, this initial information is enough for publishing primitive pages containing mostly text.

What is a web server? From the point of view of the average person, this is a kind of black box that processes browser requests and produces web pages in response. The technician will bombard you with a lot of obscure terms. As a result, it is sometimes difficult for novice web server administrators to understand the variety of terms and technologies. Indeed, the field of web development is developing dynamically, but many modern solutions are based on basic technologies and principles, which we will talk about today.

If you don’t know where to start, start from the beginning. To avoid getting lost in the variety of modern web technologies, you need to turn to history to understand where the modern Internet began and how technology has developed and improved.

HTTP server

At the dawn of the Internet, sites were simple repositories of specially marked-up documents and some associated data: files, images, etc. So that documents could refer to each other and to related data, a special hypertext markup language, HTML, was proposed, and to access such documents via the Internet, the HTTP protocol. Both the language and the protocol, developing and improving, have survived to this day without fundamental changes. Only HTTP/2, which is just beginning to replace the HTTP/1.1 protocol adopted in 1999, brings dramatic changes that take the requirements of the modern network into account.

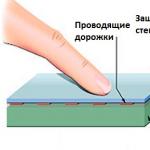

The HTTP protocol is implemented using client-server technology and operates on a stateless request-response principle. The target of a request is a resource, which is identified by a uniform resource identifier, URI (Uniform Resource Identifier). HTTP uses one of the URI varieties, the URL (Uniform Resource Locator), which, in addition to identifying the resource, also specifies its location.
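To make the parts of a URL concrete, here is a small sketch using the `urlsplit` function from Python's standard library; the address itself is a made-up example.

```python
from urllib.parse import urlsplit

# A made-up URL used only to show its components.
url = "http://example.com:8080/docs/index.html?lang=en#top"
parts = urlsplit(url)

print("scheme:  ", parts.scheme)    # protocol used to reach the resource
print("host:    ", parts.hostname)  # server on which the resource is located
print("port:    ", parts.port)      # TCP port on that server
print("path:    ", parts.path)      # resource on the server
print("query:   ", parts.query)     # parameters passed with the request
print("fragment:", parts.fragment)  # position inside the document, handled by the client
```

The scheme, host and port say where the resource is located, while the path and query say which resource is requested.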

The HTTP server's task is to process the client's request and either provide it with the required resource, or inform it that it is impossible to do so. Consider the following diagram:

The user, through an HTTP client, most often a browser, requests a certain URL from the HTTP server; the server checks the request and sends the file corresponding to the URL, usually an HTML page. The resulting document may contain links to related resources, such as images. If they need to be displayed on the page, the client requests them from the server one after another; besides images, style sheets, client-side scripts, etc. can also be requested. Having received all the necessary resources, the browser processes them according to the code of the HTML document and presents the finished page to the user.
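The exchange described above can be sketched as a single function that turns a raw request into a raw response. This is a simplified illustration, not a real server: the `DOCROOT` dictionary stands in for files on disk, and its contents, like the function name, are assumptions made for the example.

```python
# Hypothetical site content keyed by URL path (stands in for files on disk).
DOCROOT = {
    "/index.html": b'<html><body><img src="/logo.png"></body></html>',
    "/logo.png": b"placeholder image bytes",
}

CONTENT_TYPES = {".html": "text/html", ".png": "image/png"}


def handle_request(raw_request, docroot):
    """Build a raw HTTP/1.1 response for one raw request."""
    request_line = raw_request.split(b"\r\n", 1)[0].decode("ascii")
    method, path, _version = request_line.split(" ")
    if method != "GET":
        status, ctype, body = "405 Method Not Allowed", "text/plain", b"Method Not Allowed"
    elif path in docroot:
        ext = path[path.rfind("."):]
        ctype = CONTENT_TYPES.get(ext, "application/octet-stream")
        status, body = "200 OK", docroot[path]
    else:
        status, ctype, body = "404 Not Found", "text/plain", b"Not Found"
    head = (f"HTTP/1.1 {status}\r\n"
            f"Content-Type: {ctype}\r\n"
            f"Content-Length: {len(body)}\r\n\r\n")
    return head.encode("ascii") + body


# The browser first asks for the page, then for the image it refers to.
for path in ("/index.html", "/logo.png", "/missing.html"):
    request = f"GET {path} HTTP/1.1\r\nHost: example.com\r\n\r\n".encode("ascii")
    response = handle_request(request, DOCROOT)
    print(path, "->", response.split(b"\r\n", 1)[0].decode("ascii"))
```

Each request is handled independently of the previous ones, which is what the stateless request-response principle means in practice.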

As many have already guessed, under the name of the HTTP server in this scheme there is an entity that is better known today as a web server. The main goal and task of a web server is to process HTTP requests and return their results to the user. The web server cannot generate content on its own and only works with static content. This is also true for modern web servers, despite all the richness of their capabilities.

For a long time, one web server was enough to implement a full-fledged website. But as the Internet grew, the capabilities of static HTML became sorely lacking. A simple example: each static page is self-sufficient and must contain links to all resources associated with it; when adding new pages, links to them will need to be added to existing pages, otherwise the user will never be able to get to them.

The sites of that time generally bore little resemblance to modern ones, for example, below is a view of one of the pioneers of the Russian-language Internet, the site of the Rambler company:

And clicking on any of the links can generally confuse the modern user; it is not possible to return back from such a page except by pressing the button of the same name in the browser.

An attempt to create something more or less similar to a modern website very soon turned into an increasing amount of work to make changes to existing pages. After all, if we changed something in the general part of the site, for example, the logo in the header, then we need to make this change on all existing pages. And if we changed the path to one of the pages or deleted it, then we will need to find all the links to it and change or delete them.

Therefore, the next step in the development of web servers was support for server-side includes, SSI (Server Side Includes). This technology made it possible to include the contents of other files in the page code, so that repeating elements such as the header, footer, menu, etc. could be moved into separate files and simply included during the final assembly of the page.

Now, to change a logo or menu item, changes will need to be made in just one file, instead of editing all existing pages. In addition, SSI made it possible to display some dynamic content on pages, for example, the current date and perform simple conditions and work with variables. This was a significant step forward, making the work of webmasters easier and improving the convenience of users. However, these technologies still did not allow us to implement a truly dynamic website.

It is worth noting that SSI is still actively used today, where some static content needs to be inserted into the page code, primarily due to its simplicity and low requirements for resources.
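The idea behind SSI can be illustrated with a short sketch that expands include directives the way a server would during the final assembly of a page. It handles only the `<!--#include virtual="..." -->` form; the file names and contents are invented for the example, and a real server supports many more directives.

```python
import re

# Matches an SSI include directive and captures the referenced file name.
INCLUDE_RE = re.compile(r'<!--#include\s+virtual="([^"]+)"\s*-->')

ERROR_TEXT = "[an error occurred while processing this directive]"


def expand_includes(page, files):
    """Replace each include directive with the contents of the named file."""
    def substitute(match):
        return files.get(match.group(1), ERROR_TEXT)
    return INCLUDE_RE.sub(substitute, page)


# Hypothetical shared fragments: change them once, every page picks it up.
FILES = {
    "header.html": "<header>Logo</header>",
    "footer.html": "<footer>Contacts</footer>",
}

PAGE = ('<!--#include virtual="header.html" -->\n'
        "<p>Article text</p>\n"
        '<!--#include virtual="footer.html" -->')

print(expand_includes(PAGE, FILES))
```

The page on disk contains only the directives; the header and footer live in one place each, so changing the logo means editing a single file.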

CGI

The next step in the development of web technology was the emergence of special programs (scripts) that process user requests on the server side. Most often they are written in scripting languages; initially it was Perl, today PHP holds the lead. Gradually, a whole class of programs emerged, content management systems, CMS (Content Management System), which are full-fledged web applications capable of processing user requests dynamically.

Now an important point: web servers did not and do not know how to execute scripts; their task is to serve static content. Here a new entity comes onto the scene, the application server, which is an interpreter of scripting languages and runs the web applications written in them. A DBMS is usually used to store data, because of the need to work with a large amount of interrelated information.

However, the application server cannot work with the HTTP protocol and process user requests, since this is the task of the web server. To let the two interact, the common gateway interface, CGI (Common Gateway Interface), was developed.

It should be clearly understood that CGI is not a program or a protocol, it is precisely an interface, i.e. a set of ways of interaction between applications. Also, the term CGI should not be confused with the concept of a CGI application or CGI script, which denote a program (script) that supports work through the CGI interface.

Standard input/output streams are used to transfer data: data is passed from the web server to the CGI application via stdin, received back via stdout, and error messages are transmitted via stderr.

Let us consider the operation of such a system in more detail. Having received a request from the user's browser, the web server determines that dynamic content is requested and generates a special request, which it sends to the web application through the CGI interface. On receiving it, the application starts and executes the request; the result is the HTML code of a dynamically generated page, which is passed back to the web server, after which the application exits.
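The sequence above can be sketched from the web server's side. In this illustration the server starts a new process for each request, passes the request metadata in environment variables and the body on stdin, and reads the page from stdout. The embedded `CGI_PROGRAM` and the `run_cgi` helper are hypothetical stand-ins; only the environment variable names follow the CGI/1.1 convention.

```python
import os
import subprocess
import sys

# Stand-in CGI program (hypothetical): request metadata arrives in environment
# variables, the request body on stdin; headers, a blank line and the page go
# to stdout.
CGI_PROGRAM = r'''
import os, sys
length = int(os.environ.get("CONTENT_LENGTH") or 0)
body = sys.stdin.buffer.read(length).decode()
page = "<p>%s ?%s body=%s</p>" % (
    os.environ["REQUEST_METHOD"], os.environ["QUERY_STRING"], body)
sys.stdout.buffer.write(b"Content-Type: text/html\r\n\r\n" + page.encode())
'''


def run_cgi(method, query_string, body=b""):
    """Start a fresh process for one request and return (headers, page)."""
    env = dict(os.environ,
               GATEWAY_INTERFACE="CGI/1.1",
               REQUEST_METHOD=method,
               QUERY_STRING=query_string,
               CONTENT_LENGTH=str(len(body)))
    result = subprocess.run([sys.executable, "-c", CGI_PROGRAM],
                            input=body, env=env,
                            stdout=subprocess.PIPE, stderr=subprocess.PIPE,
                            check=True)
    # The process has already exited here; its output is split at the blank line.
    headers, _, page = result.stdout.partition(b"\r\n\r\n")
    return headers.decode(), page.decode()


headers, page = run_cgi("POST", "id=7", b"hello")
print(headers)
print(page)
```

Every call to `run_cgi` pays the full cost of starting and stopping an interpreter, which is the per-request overhead inherent in this scheme.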

Another important feature of a dynamic site is that its pages do not physically exist in the form in which they are presented to the user. In fact, there is a web application, i.e. a set of scripts and templates, and a database that stores the site's materials and service information; static content is stored separately: pictures, JavaScript, files.

Having received a request, the web application retrieves data from the database and fills the template specified in the request with it. The result is sent to the web server, which supplements the page generated in this way with static content (images, scripts, styles) and sends it to the user’s browser. The page itself is not saved anywhere, except in the cache, and when a new request is received, the page will be generated again.
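A minimal sketch of this step, assuming an in-memory SQLite database and a single template; the table layout, the sample article and the function names are invented for illustration and do not describe any particular CMS.

```python
import sqlite3
from html import escape
from string import Template

# One page template shared by all articles.
PAGE_TEMPLATE = Template(
    "<html><head><title>$title</title></head>"
    "<body><h1>$title</h1><p>$body</p></body></html>"
)


def make_database():
    """Create an in-memory database holding one hypothetical article."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE articles (id INTEGER PRIMARY KEY, title TEXT, body TEXT)")
    db.execute("INSERT INTO articles VALUES (1, 'Hello', 'First post')")
    return db


def render_article(db, article_id):
    """Fetch one row and fill the template; return None if there is no such row."""
    row = db.execute(
        "SELECT title, body FROM articles WHERE id = ?", (article_id,)
    ).fetchone()
    if row is None:
        return None
    title, body = row
    # Escape stored text so it cannot break the page markup.
    return PAGE_TEMPLATE.substitute(title=escape(title), body=escape(body))


db = make_database()
print(render_article(db, 1))
```

The page exists only as the return value of `render_article`: nothing is written to disk, and the next request builds it again from the same template and the current database contents.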

The advantages of CGI include language and architectural independence: a CGI application can be written in any language and work equally well with any web server. Given the simplicity and openness of the standard, this has led to the rapid development of web applications.

However, in addition to its advantages, CGI also has significant disadvantages. The main one is the high overhead of starting and stopping a process for each request, which entails increased requirements for hardware resources and low performance. And using standard I/O streams limits scalability and high availability, because it requires the web server and the application server to reside on the same system.

At the moment, CGI is practically not used, as it has been replaced by more advanced technologies.

FastCGI

As the name suggests, the main goal of this technology was to improve CGI performance. A further development of CGI, FastCGI is a client-server protocol for interaction between a web server and an application server, providing high performance and security.

FastCGI eliminates the main problem of CGI, the restart of the web application process for each request: FastCGI processes run constantly, which saves significant time and resources. For data transmission, UNIX sockets or TCP/IP are used instead of standard streams, which lets you place the web server and the application servers on different hosts and thus provides scalability and/or high availability of the system.

We can also run several FastCGI processes on one computer, which can process requests in parallel, or have different settings or versions of the scripting language. For example, you can simultaneously have several versions of PHP for different sites, directing their requests to different FastCGI processes.

Process managers are used to manage FastCGI processes and load distribution; they can be either part of the web server or separate applications. Popular web servers Apache and Lighttpd have built-in FastCGI process managers, while Nginx requires an external manager to work with FastCGI.

PHP-FPM and spawn-fcgi

External managers for FastCGI processes include PHP-FPM and spawn-fcgi. PHP-FPM was originally a set of patches for PHP by Andrey Nigmatulin that solved a number of issues in managing FastCGI processes; starting from version 5.3 it is part of the project and is included in the PHP distribution. PHP-FPM can dynamically adjust the number of PHP processes depending on the load, reload pools without losing requests and restart failed processes automatically, which makes it a fairly advanced manager.

Spawn-fcgi is part of the Lighttpd project, but is not part of the web server of the same name; by default, Lighttpd uses its own, simpler, process manager. The developers recommend using it in cases where you need to manage FastCGI processes located on another host, or require advanced security settings.

External managers allow you to isolate each FastCGI process in its own chroot (a changed root directory that the application cannot reach beyond), separate from both the chroot of other processes and the chroot of the web server. And, as we have already said, they allow you to work with FastCGI applications located on other servers via TCP/IP; for local access you should choose a UNIX socket, as it is the faster connection type.

If we look at the diagram again, we will see that we have a new element - a process manager, which is an intermediary between the web server and application servers. This complicates the scheme somewhat, since you have to configure and maintain more services, but at the same time opens up broader possibilities, allowing you to customize each element of the server specifically for your tasks.

In practice, when choosing between a built-in manager and an external one, assess the situation sensibly and choose exactly the tool that best suits your needs. For example, when creating a simple server for several sites on standard engines, the use of an external manager will be clearly unnecessary. Although no one imposes their point of view on you. The good thing about Linux is that everyone can, as if using a construction kit, assemble exactly what they need.

SCGI, PCGI, PSGI, WSGI and others

As you delve into the topic of web development, you will certainly come across references to various CGI technologies, the most popular of which we have listed in the title. You can get confused by such diversity, but if you carefully read the beginning of our article, then you know how CGI and FastCGI work, and, therefore, understanding any of these technologies will not be difficult for you.

Despite the differences between the implementations, the basic principles remain the same. All of these technologies provide a gateway interface for interaction between the web server and the application server. Gateways decouple the web server environment from the web application environment, so you can use any combination without worrying about possible incompatibility. Simply put, it doesn't matter whether your web server supports a particular technology or scripting language, as long as it can handle the type of gateway you need.

And since we’ve already listed a whole bunch of abbreviations in the title, let’s go through them in more detail.

SCGI (Simple Common Gateway Interface) - designed as an alternative to CGI and in many ways similar to FastCGI, but easier to implement. Everything we said about FastCGI is also true for SCGI.

PCGI (Perl Common Gateway Interface) - a Perl library for working with the CGI interface. For a long time it was the main option for running Perl applications via CGI; it offers good performance (as far as CGI goes), modest resource requirements and good overload protection.

PSGI (Perl Web Server Gateway Interface) - technology for interaction between a web server and an application server for Perl. If PCGI is a tool for working with a classic CGI interface, then PSGI is more reminiscent of FastCGI. A PSGI server provides an environment for running Perl applications that runs continuously as a service and can communicate with a web server over TCP/IP or UNIX sockets and provides Perl applications with the same benefits as FastCGI.

WSGI (Web Server Gateway Interface) is another specialized gateway interface, designed for interaction between a web server and an application server for programs written in Python.
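To make the idea of a gateway interface more concrete, here is a minimal sketch of a WSGI application. The interface itself is just a callable that receives the request environment and a `start_response` function; the response text and the use of PATH_INFO are choices made for this example.

```python
def application(environ, start_response):
    """A minimal WSGI application: one callable invoked by the gateway per request."""
    # The gateway passes request details in the environ dictionary.
    path = environ.get("PATH_INFO", "/")
    body = "You requested {}\n".format(path).encode("utf-8")
    start_response(
        "200 OK",
        [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("Content-Length", str(len(body))),
        ],
    )
    # The body is returned as an iterable of byte strings.
    return [body]
```

Any WSGI-capable server can run this callable unchanged; for local experiments the standard library's `wsgiref.simple_server.make_server` is usually enough.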

As you can easily see, all the technologies we have listed are, to one degree or another, analogues of CGI/FastCGI, but for specific areas of application. The data we have provided will be quite sufficient for a general understanding of the principle and mechanisms of their operation, and a deeper study of them makes sense only with serious work with these technologies and languages.

Application Server as an Apache Module

If earlier we talked about some abstract web server, now we will talk about a concrete solution and this is not a matter of our preferences. Among web servers, Apache occupies a special place; in most cases, when they talk about a web server on the Linux platform, and indeed about a web server in general, it will be Apache that is meant.

You could say that this is a kind of "default" web server. Take any mass hosting - Apache will be there, take any web application - the default settings are made for Apache.

Yes, from a technological point of view, Apache is not the crown of technology, but it represents the golden mean, it is simple, understandable, flexible in settings, and universal. If you are taking your first steps in website building, then Apache is your choice.

Here we may be reproached that Apache has long been out of date and that all the “real guys” have already installed Nginx, and so on, so let us explain this point in more detail. All popular CMSs are configured out of the box for use with Apache, which lets you focus all your attention on the web application and rule out the web server as a possible source of problems.

All forums that are popular among beginners also use Apache as a web server, and most of the tips and recommendations apply specifically to it. Alternative web servers usually require finer and more careful configuration of both the web server and the web application. Users of these products also tend to be much more experienced, so typical beginner problems are rarely discussed among them. As a result, a situation may arise when nothing works and there is no one to ask. This is guaranteed not to happen with Apache.

Actually, what did the Apache developers do that allowed their brainchild to occupy a special place? The answer is quite simple: they went their own way. While CGI proposed abstracting away from specific solutions and focusing on a universal gateway, Apache did it differently - it integrated the web server and application server as much as possible.

Indeed, if we run the application server as a web server module in a common address space, we get a much simpler scheme:

What benefits does this provide? The simpler the scheme and the fewer elements it contains, the easier and cheaper it is to maintain and the fewer points of failure it has. While this may not matter much for a single server, for a hosting provider it is a very significant factor.

The second advantage is performance. Again, we will disappoint Nginx fans: because it works in a single address space, the Apache + mod_php application server will always be 10-20% faster than any other web server + FastCGI (or another CGI solution). But you should also remember that the speed of a site is determined not only by the performance of the application server but also by a number of other factors, in which alternative web servers can show significantly better results.

But there is one more, quite serious advantage: the ability to configure the application server at the level of an individual site or user. Let's go back a little: in FastCGI/CGI schemes, the application server is a separate service, with its own separate settings, which can even work on behalf of a different user or on a different host. From the point of view of the administrator of a single server or some large project, this is great, but for users and hosting administrators, not so much.

The development of the Internet has made the number of possible web applications (CMSs, scripts, frameworks, etc.) very large, and the low barrier to entry has attracted many people without special technical knowledge to website development. At the same time, different web applications may require different application server settings. What can be done? Should users contact support every time?

The solution turned out to be quite simple. Since the application server is now part of the web server, the latter can be made responsible for managing its settings. Traditionally, in addition to configuration files, Apache settings have been managed with .htaccess files, in which users can write their own directives; these apply to the directory containing the file and to everything below it, unless overridden by another .htaccess file further down. In mod_php mode, these files also allow you to change many PHP options for an individual site or directory.

To apply the changes you do not need to restart the web server, and in case of an error only this site (or part of it) will stop working. In addition, editing a simple text file and placing it in a folder on the website is something even untrained users can do, and it is safe for the server as a whole.

The combination of all these advantages is what has given Apache such widespread use and its status as a universal web server. Other solutions may be faster, more economical or better, but they always require customization for the task, so they are used mainly in specialized projects; in the mass segment Apache dominates without any alternative.

Having talked about the advantages, let's move on to the disadvantages. Some of them are simply the other side of the coin. The fact that the application server is part of the web server gives advantages in performance and ease of configuration, but it also limits us. In terms of security, the application server always runs on behalf of the web server. In terms of flexibility, we cannot place the web server and the application server on different hosts, and we cannot use application servers with different versions of the scripting language or different settings.

The second disadvantage is higher resource consumption. In a CGI scheme, the application server generates a page and sends it to the web server, freeing up resources; the Apache + mod_php combination keeps the application server's resources busy until the web server returns the contents of the page to the client. If the client is slow, then the resources will be busy for the entire duration of its service. This is why Nginx is often placed in front of Apache, which plays the role of a fast client; this allows Apache to quickly serve a page and free up resources by shifting interaction with the client to the more economical Nginx.

Conclusion

It is impossible to cover the entire spectrum of modern technologies in one article, so we focused only on the main ones, deliberately leaving some things behind the scenes and resorting to significant simplifications. Undoubtedly, when you start working in this area you will need a deeper study of the topic, but to absorb new knowledge you need a certain theoretical foundation, which we have tried to lay with this material.

In this article I will try to outline the operating patterns of web servers as broadly as possible. This will help you choose a server or decide which architecture is faster without being based on often biased benchmarks.

In general, the article is a global overview of “what happens.” No numbers.

The article was written based on experience working with servers:

- Apache, Lighttpd, Nginx (in C)

- Tomcat, Jetty (in Java)

- Twisted (Python)

- Erlang OTP (Erlang language)

- and the Linux and FreeBSD operating systems

However, the principles are general enough that they should apply in some form to Windows OS, Solaris, and a large number of other web servers.

Web Server Purpose

The goal of a web server is simple - to serve a large number of clients simultaneously, using hardware as efficiently as possible. How to do this - this is the main problem and the subject of the article;)

Working with connections

Where does request processing begin? Obviously - from receiving a connection from the user.

To do this, different OSes use different system calls. The best known, and the slowest with a large number of connections, is select. More efficient ones are poll, kqueue and epoll.

Modern web servers are gradually abandoning select.
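As an illustration of waiting on many connections at once, here is a small Python sketch built on the standard `selectors` module, whose `DefaultSelector` picks the most efficient mechanism available on the platform and falls back to poll or select otherwise. The `wait_for_readable` helper is a name invented for this example.

```python
import selectors
import socket


def wait_for_readable(socks, timeout=1.0):
    """Return the sockets from `socks` that have data ready to read."""
    # DefaultSelector uses epoll on Linux and kqueue on BSD/macOS where
    # available, and falls back to poll or select elsewhere.
    with selectors.DefaultSelector() as selector:
        for sock in socks:
            selector.register(sock, selectors.EVENT_READ)
        return [key.fileobj for key, _events in selector.select(timeout)]


if __name__ == "__main__":
    # Two connected pairs; only the first one has data pending.
    a, b = socket.socketpair()
    c, d = socket.socketpair()
    a.sendall(b"ping")
    print(wait_for_readable([b, d]) == [b])
    for s in (a, b, c, d):
        s.close()
```

The server code stays the same regardless of which system call ends up being used, which is one reason such wrappers are popular.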

OS optimizations

Even before a connection is accepted, optimizations are possible at the OS kernel level. For example, the OS kernel, having received a connection, may hold it back from the web server until one of the following events occurs:

- data has arrived (dataready)

- the entire HTTP request has arrived (httpready)

At the time of writing, both methods are supported in FreeBSD (ACCEPT_FILTER_HTTP, ACCEPT_FILTER_DATA), and only the first is supported in Linux (TCP_DEFER_ACCEPT).

These optimizations allow the server to spend less time waiting for data, thus improving overall performance.
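On Linux the first of these optimizations can be requested with a socket option. The sketch below is an assumption-laden illustration: the `make_listener` helper, the 5-second value and the backlog of 128 are arbitrary choices, and the option is looked up defensively because it does not exist on other platforms.

```python
import socket


def make_listener(host="127.0.0.1", port=0, defer_seconds=5):
    """Create a listening socket, enabling TCP_DEFER_ACCEPT where available."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    # TCP_DEFER_ACCEPT is Linux-only, so look it up defensively.
    defer_accept = getattr(socket, "TCP_DEFER_ACCEPT", None)
    if defer_accept is not None:
        # accept() is not woken until data arrives or the timeout expires.
        listener.setsockopt(socket.IPPROTO_TCP, defer_accept, defer_seconds)
    listener.bind((host, port))
    listener.listen(128)
    return listener
```

With the option set, a worker that calls `accept()` can usually read the request immediately instead of blocking while it waits for the first bytes.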

Connection accepted

So, the connection is accepted. Now the main task falls on the shoulders of the server - to process the request and send a response to the visitor. Here we will consider only dynamic queries, which are significantly more complex than returning images.

All servers use an asynchronous approach.

It consists in handing request processing off to the side: the request is given to an auxiliary process/thread for execution, while the server keeps working, accepting new connections and dispatching them for execution.

Depending on the implementation, the "worker" process may send the entire result back to the server (for subsequent return to the client), may send only a handle to the result to the server (without copying), or may return the result to the client itself.

Basic strategies for working with workers

Working with workers consists of several elements that can be combined in different ways and get different results.

Worker type

There are two main types - process and thread. To improve performance, sometimes both types are used simultaneously, spawning several processes and a bunch of threads in each.

Process

Workers can be separate processes. In this case they do not interact with each other, and the data of different workers is completely independent.

Thread

Threads, unlike processes, have common, shared data structures. The worker's code must implement access synchronization so that simultaneous writing to the same structure does not lead to chaos.
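A minimal sketch of such synchronization in Python is shown below. The `HitCounter` class and the `run_workers` helper are hypothetical names for this example; the point is only that the shared structure is modified under a lock.

```python
import threading


class HitCounter:
    """A structure shared by all worker threads."""

    def __init__(self):
        self._lock = threading.Lock()
        self.hits = 0

    def record_hit(self):
        # Only one thread at a time may run the read-modify-write below.
        with self._lock:
            self.hits += 1


def run_workers(counter, workers=8, hits_per_worker=1000):
    """Start the worker threads, wait for all of them, return the total."""

    def work():
        for _ in range(hits_per_worker):
            counter.record_hit()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter.hits
```

Without the lock, two threads could read the same old value and one update would be lost; with process-based workers the question does not arise because nothing is shared.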

Address space

Each process, including the server, has its own address space, which it uses to separate data.

Inside the server

When working inside the server, the worker has access to server data. It can change any structure and do all sorts of nasty things, especially if it is written with errors.

The advantage is that there is no transfer of data from one address space to another.

Outside the server

A worker can be launched completely independently of the server and receive data for processing through a special protocol (for example, FastCGI).

Of course, this option is the safest for the server. But it requires extra work to forward the request and the result between the server and the worker.

The birth of workers

To process many connections simultaneously, you need to have a sufficient number of workers.

There are two main strategies.

Statics

The number of workers can be strictly fixed, for example 20 worker processes in total. If all the workers are busy and a 21st request arrives, the server responds with a Temporarily Unavailable code (HTTP 503).

Dynamics

For more flexible resource management, workers can be spawned dynamically, depending on the load. The worker spawning algorithm can be parameterized, for example (Apache pre-fork), like this:

- Minimum number of available workers = 5

- Maximum number of available workers = 20

- Total number of workers, at most = 30

- Initial number of workers = 10
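The way such parameters interact can be sketched as a small decision function. This is not Apache's actual algorithm, only an illustration; the function and parameter names are hypothetical, and the defaults are taken from the list above.

```python
# Number of workers to start with, as in the list above.
START_WORKERS = 10


def plan_workers(idle, total, min_spare=5, max_spare=20, max_total=30):
    """Return how many workers to start (positive) or stop (negative)."""
    if idle < min_spare:
        # Too few idle workers: start more, but never exceed the overall cap.
        return max(0, min(min_spare - idle, max_total - total))
    if idle > max_spare:
        # Too many idle workers: stop the surplus to free resources.
        return -(idle - max_spare)
    # The number of idle workers is within bounds: do nothing.
    return 0
```

For example, with 2 idle workers out of 12 the function asks for 3 more, while with 30 workers already running it asks for none even if all of them are busy.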

Cleaning between requests

Workers can either reinitialize themselves between requests, or simply process requests one after another.

Clean

Before each request, the worker is cleared of whatever was left from the previous one: internal variables are reset, and so on.

As a result, there are no problems or errors associated with using variables left over from the old query.

Persistent

No state clearing. The result is resource savings.
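The difference between the two strategies can be shown with a toy worker. The `Worker` class and its `state` dictionary are invented for this sketch and do not correspond to any particular server.

```python
class Worker:
    """Handles requests one after another; `clean` resets state between them."""

    def __init__(self, clean):
        self.clean = clean
        self.state = {}

    def handle(self, request):
        if self.clean:
            # Clean mode: discard everything left over from the previous request.
            self.state = {}
        # Count the requests seen in the current state; in persistent mode
        # the leftovers from earlier requests show up as a count above 1.
        self.state["seen"] = self.state.get("seen", 0) + 1
        return "{} (request #{} in this state)".format(request, self.state["seen"])
```

A clean worker always reports request #1, at the cost of rebuilding its state each time; a persistent worker keeps counting, which saves work but lets data from one request leak into the next.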

Analysis of typical configurations

Let's see how these combinations work using different servers as examples.

Apache (pre-fork MPM) + mod_php

To process dynamic requests, a php module running in the server context is used.

- Process

- Inside the server

- Dynamics

- Clean

Apache (worker MPM) + mod_php

To process dynamic requests, the php module running in the server context is used.

However, since PHP works in the server's address space, data shared between threads periodically gets corrupted, so this combination is unstable and not recommended. This is due to bugs in mod_php, which includes the PHP core and various PHP modules.

Because of the shared address space, an error in a module can bring down the entire server.

- Thread

- Inside the server

- Dynamics

- Clean

Apache (event mpm) + mod_php

Event MPM is a strategy for working with workers that only Apache uses. Everything works exactly as with regular threads, but with a small addition for handling Keep-Alive.

The Keep-Alive setting allows the client to send many requests over one connection, for example to get a web page and 20 pictures. Typically, the worker finishes processing a request and then waits for some time (the keep-alive time) to see whether more requests follow on this connection. That is, it simply sits idle in memory.

Event MPM creates an additional thread that takes over waiting for all Keep-Alive requests, freeing up the worker for other useful tasks. As a result, the total number of workers is significantly reduced, because no one is now waiting for clients, and everyone is working.

- Thread

- Inside the server

- Dynamics

- Clean

Apache + mod_perl

A special feature of combining Apache with mod_perl is the ability to call Perl procedures while Apache is processing a request.

Due to the fact that mod_perl works in the same address space as the server, it can register its procedures through Apache hooks at different stages of the server's operation.

For example, you can work at the same stage as mod_rewrite, rewriting the URL in the PerlTransHandler hook.

The following example implements a rewrite from /example to /passed, but in Perl.

    # in the Apache config, with mod_perl enabled
    PerlModule MyPackage::Example
    PerlTransHandler MyPackage::Example

    # in the file MyPackage/Example.pm
    package MyPackage::Example;
    use Apache::Constants qw(DECLINED);
    use strict;

    sub handler {
        my $r = shift;
        # rewrite the URI (string comparison, hence eq rather than ==)
        $r->uri("/passed") if $r->uri eq "/example";
        # DECLINED lets Apache continue its normal processing
        return DECLINED;
    }
    1;

Unfortunately, mod_perl is very heavy in itself, so using it only for rewrites is very expensive.

Unlike mod_php, the Perl module is persistent, that is, it does not reinitialize itself every time. This is convenient because it frees you from having to reload a large pack of modules before each request.

- Process/thread - MPM dependent

- Inside the server

- Dynamics

- Persistent

Twisted

This asynchronous server is written in Python. Its peculiarity is that the web application programmer creates additional workers and gives them tasks.

    # example code on a Twisted server
    from twisted.internet.threads import deferToThread

    # long-running function that processes the request
    def do_something_big(data):
        ...

    # while processing the request
    d = deferToThread(do_something_big, "parameters")
    # bind a callback to the result of do_something_big
    d.addCallback(handleOK)
    # ... and to an error raised while executing do_something_big
    d.addErrback(handleError)

Here, upon receiving a request, the programmer calls deferToThread to hand the do_something_big function to a separate thread. If do_something_big completes successfully, the handleOK function is executed; if there is an error, handleError is executed.

Meanwhile, the current thread continues normal processing of connections.

Everything happens in a single address space, so all workers can share, for example, the same array with users. This makes it easy to write multi-user chat-type applications in Twisted.

- Thread

- Inside the server

- Dynamics

- Persistent

Tomcat, Servlets

Servlets are a classic example of threaded web applications. A single Java application code runs on multiple threads. Synchronization is mandatory and must be performed by the programmer.

- Thread

- Inside the server

- Dynamics

- Persistent

FastCGI

FastCGI is an interface for communication between a web server and external workers, which are usually launched as processes. The server transmits environment variables, headers and body of the request in a special (non-HTTP) format, and the worker returns a response.
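To give a feel for this non-HTTP format, the sketch below packs two kinds of FastCGI records as laid out in the FastCGI specification: an 8-byte header followed by content. The helper names are invented for this example, and it handles only short name-value pairs and no padding, so it is an illustration rather than a usable client.

```python
import struct

# Constants from the FastCGI specification.
FCGI_VERSION_1 = 1
FCGI_BEGIN_REQUEST = 1
FCGI_PARAMS = 4
FCGI_RESPONDER = 1


def fcgi_record(record_type, request_id, content=b""):
    """Pack one record: version, type, request id, content length, padding, reserved."""
    header = struct.pack(
        "!BBHHBB", FCGI_VERSION_1, record_type, request_id, len(content), 0, 0
    )
    return header + content


def fcgi_name_value(name, value):
    """Encode one name-value pair; lengths under 128 take one byte each."""
    if len(name) > 127 or len(value) > 127:
        raise ValueError("this sketch only handles short names and values")
    return bytes([len(name), len(value)]) + name + value


def begin_request(request_id):
    """BEGIN_REQUEST body: role (2 bytes), flags (1 byte), 5 reserved bytes."""
    body = struct.pack("!HB5x", FCGI_RESPONDER, 0)
    return fcgi_record(FCGI_BEGIN_REQUEST, request_id, body)
```

A web server would send a BEGIN_REQUEST record, then the environment variables as PARAMS records such as `fcgi_record(FCGI_PARAMS, 1, fcgi_name_value(b"REQUEST_METHOD", b"GET"))`, then the request body, and read the response records back over the same connection.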

There are two ways to spawn such workers.

- Integrated with server

- Separate from the server

In the first case, the server itself creates external worker processes and manages their number.

In the second case, worker processes are spawned by a separate “spawner”, a second, additional server that can communicate only via the FastCGI protocol and manage workers. Typically a spawner spawns workers as processes rather than threads. Dynamic/Static is determined by the spawner settings, and Clean/Persistent is determined by the characteristics of the worker process.

Ways to work with FastCGI

There are two ways to work with FastCGI. The first method is the simplest, it is used by Apache.

receive the request -> submit it for processing in FastCGI -> wait for the answer -> give the answer to the client.

The second method is used by servers like lighttpd/nginx/litespeed/etc.

receive the request -> submit it for processing in FastCGI -> process other clients -> give the answer to the client when it arrives.

This difference allows Lighttpd + fastcgi to work more efficiently than Apache does, because while the Apache process is waiting, Lighttpd has time to service other connections.
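The second approach can be imitated with Python's asyncio. In this sketch the FastCGI backend is simulated with `asyncio.sleep`, and all names and delays are assumptions for the example; it only shows that the server keeps taking requests while earlier ones are still waiting for their answers.

```python
import asyncio


async def handle(request_id, backend_delay, log):
    """Forward one request to a (simulated) FastCGI backend without blocking."""
    log.append("sent:{}".format(request_id))
    # While this request waits for the backend, the event loop serves others.
    await asyncio.sleep(backend_delay)
    log.append("answered:{}".format(request_id))


async def serve(requests):
    """Handle all requests concurrently and return the order of events."""
    log = []
    await asyncio.gather(*(handle(rid, delay, log) for rid, delay in requests))
    return log


def run_demo():
    # Request 1 has a slow backend, request 2 a fast one.
    return asyncio.run(serve([(1, 0.05), (2, 0.01)]))
```

In the resulting log both requests are sent before either is answered, and the fast request 2 is answered first; a server of the first kind would have finished request 1 completely before looking at request 2.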

FastCGI operating modes

FastCGI has two modes of operation.

- Responder - the normal mode, in which FastCGI accepts a request and variables and returns a response

- Authorizer - a mode in which FastCGI allows or denies access as its response. Convenient for controlling access to protected static files

Not every server supports both modes. Lighttpd, for example, supports both.

FastCGI PHP vs PERL

The PHP interpreter resets itself each time before processing a script, while Perl simply processes requests one by one in a loop like:

    load modules;
    while (a request has arrived) {
        process it;
        print the answer;
    }

Therefore, Perl-FastCGI is much more efficient where most of the execution time is spent loading helper modules.

Summary

The article discussed the general structure of request processing and the types of workers. In addition, we looked at Apache Event MPM, ways of working with FastCGI, servlets and Twisted.

I hope this overview will serve as a starting point for choosing a server architecture for your web application.

If you use a network-connected computer every day, or your mobile gadget is connected to the Internet, you will come across the word “server” from time to time. The word appears in different combinations, and not every user understands what it refers to. What is hidden behind the word “server”, and why do users need it?

The term “server” can mean both a hardware device and the software for it. A hardware server is a separate computer that supports the operation of other PCs and office equipment. A virtual server is software. A real-world server usually combines both.

The first thing to remember is that its job is to serve the network and its users, not to manage the network. Users give the server tasks, and it solves them quickly. The better the server (HP servers, for example), the better it performs its duties.

It is difficult to imagine the work of large companies that have a lot of electronic equipment installed without combining all these devices into one network. A server in an enterprise allows you to remotely control office equipment and allows PCs to interact with each other.

Server failure or malfunction can result in disaster

In enterprises, servers make it possible to optimize the work of all departments. But we also often encounter servers in everyday life. In particular, cashiers at checkouts and in banks use a server to print documents and make payments. Servers also support the work of all mail services, social networks and messengers.

The server provides access to the Internet. All sites are stored on servers; storing them is a service called hosting, and it is provided by hosting companies.